Redshift Integrations with Apache Spark

Before you Begin

Use the following AWS CloudFormation (CFN) template to launch the required resources in your AWS account.

The stack deploys a Redshift Serverless endpoint (workgroup-xxxxxxx) and a provisioned cluster (consumercluster-xxxxxxxxxx). This demo works on both the serverless endpoint and the provisioned cluster.

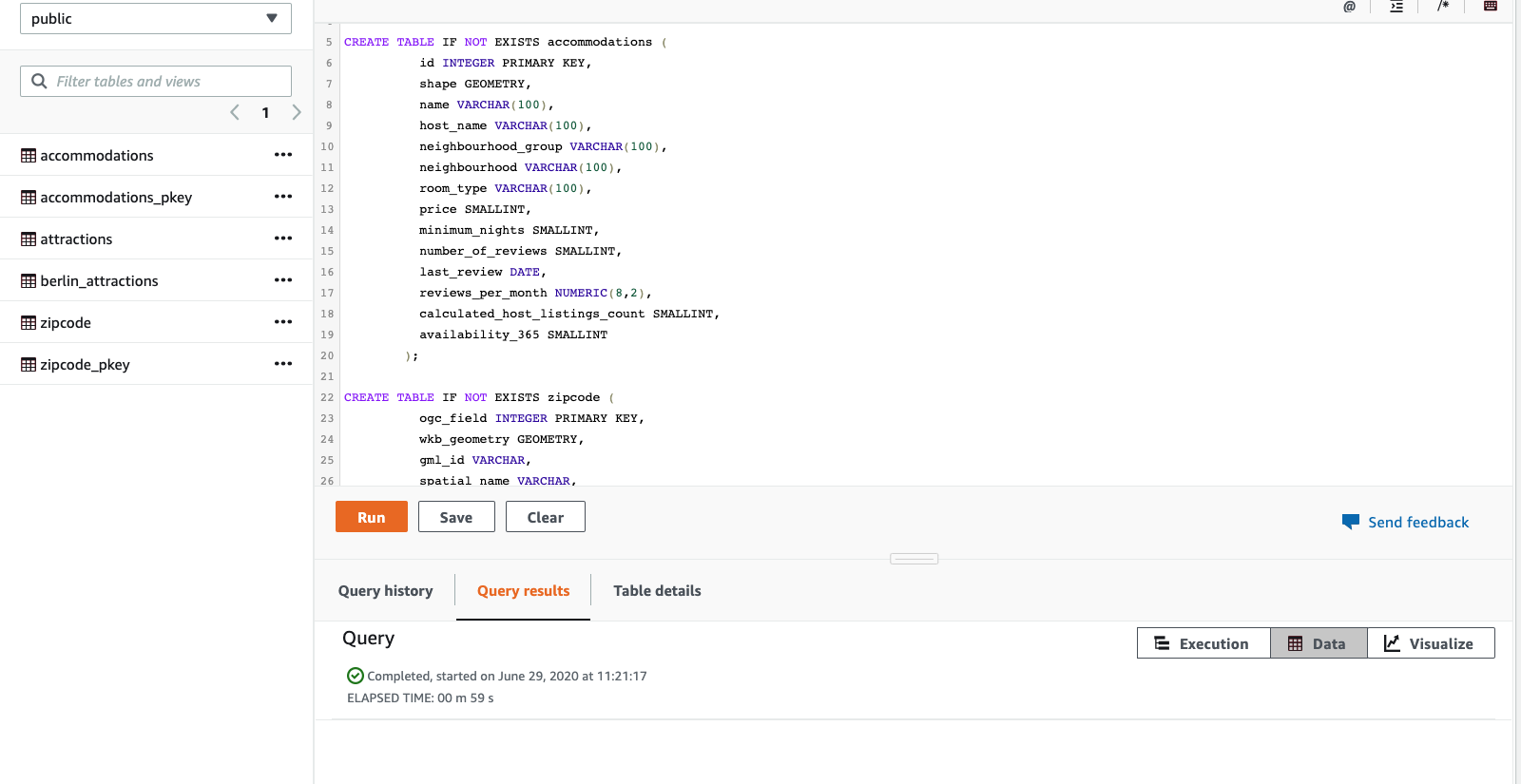

Client Tool

This demo uses the web-based Redshift Query Editor v2. Navigate to Query Editor v2.

Use the following credentials for both the serverless endpoint (workgroup-xxxxxxx) and the provisioned cluster (consumercluster-xxxxxxxxxx).

User Name: awsuser

Password: Awsuser123

Prerequisites:

- An EMR provisioned cluster running release 6.9.0 or higher, with Pig 0.17.0 or higher, JupyterHub 1.4.1 or higher, JupyterEnterpriseGateway 2.6.0 or higher, and Spark 3.3.0 or higher.

- EMR notebooks (PySpark module) created, with the Livy configuration file updated to connect to the EMR cluster.

- A Redshift Serverless instance.

- Test pushdown for join, filter, aggregate & sort

- Get the last query executed

- Test pushdown for distinct & count

Test pushdown for join, filter, aggregate & sort

| Say | Do | Show |
|---|---|---|
| Create Spark DataFrames to test pushdown for join, filter, aggregate, and sort | Create a new cell in your EMR notebook and execute the code below | |
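
As a minimal sketch of such a cell, the snippet below reads two Redshift tables through the connector and chains a join, a filter, an aggregate, and a sort. It is an illustration under stated assumptions, not the demo's own code: the table and column names (`tickit.sales`, `tickit.date`, `dateid`, `caldate`, `qtysold`) are hypothetical, as are the helper names and the JDBC URL, S3 staging prefix, and IAM role passed in by the caller.

```python
# Data source name of the spark-redshift community connector.
REDSHIFT_FORMAT = "io.github.spark_redshift_community.spark.redshift"


def redshift_options(jdbc_url, temp_dir, iam_role_arn):
    """Collect the connector options shared by every read (all values are placeholders)."""
    return {
        "url": jdbc_url,               # hypothetical JDBC URL of the workgroup or cluster
        "tempdir": temp_dir,           # hypothetical S3 prefix used for staging data
        "aws_iam_role": iam_role_arn,  # hypothetical role Redshift assumes to reach S3
    }


def read_table(spark, options, table):
    """Return a lazy DataFrame over one Redshift table."""
    reader = spark.read.format(REDSHIFT_FORMAT)
    for key, value in options.items():
        reader = reader.option(key, value)
    return reader.option("dbtable", table).load()


def sales_by_day(spark, options):
    """Join, filter, aggregate, and sort; each step is a pushdown candidate."""
    sales_df = read_table(spark, options, "tickit.sales")  # assumed table name
    date_df = read_table(spark, options, "tickit.date")    # assumed table name
    return (
        sales_df.join(date_df, "dateid")      # join
        .where("caldate >= '2008-01-01'")     # filter
        .groupBy("caldate")
        .agg({"qtysold": "sum"})              # aggregate
        .orderBy("caldate")                   # sort
    )
```

In an EMR notebook where the `spark` session already exists, calling `sales_by_day(spark, options).explain()` prints the physical plan, which is one way to check whether the four operations were folded into a single Redshift query.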

Get the last query executed

| Say | Do | Show |
|---|---|---|
| Get the last query executed on Amazon Redshift with `query_label = 'spark-redshift'` | Create a new cell in your EMR notebook and execute the code below | |
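
One possible shape for this lookup is sketched below. It assumes the `SYS_QUERY_HISTORY` system view with its `query_label`, `query_text`, and `start_time` columns, and it assumes the view can be read through the connector's `query` option in your environment. The helper names and the `options` dictionary (JDBC URL, S3 staging prefix, IAM role) are hypothetical.

```python
# Data source name of the spark-redshift community connector.
REDSHIFT_FORMAT = "io.github.spark_redshift_community.spark.redshift"


def last_query_sql(label="spark-redshift"):
    """Build the SQL that returns the newest statement recorded under `label`."""
    safe_label = label.replace("'", "''")  # escape single quotes inside the SQL literal
    return (
        "SELECT query_text FROM sys_query_history "
        f"WHERE query_label = '{safe_label}' "
        "ORDER BY start_time DESC LIMIT 1"
    )


def last_query_text(spark, options, label="spark-redshift"):
    """Run the lookup through the connector and return the SQL text, or None."""
    reader = spark.read.format(REDSHIFT_FORMAT)
    for key, value in options.items():  # hypothetical url / tempdir / aws_iam_role
        reader = reader.option(key, value)
    rows = reader.option("query", last_query_sql(label)).load().collect()
    return rows[0]["query_text"] if rows else None
```

Printing the returned text right after a DataFrame action shows the SQL that reached Redshift, so a pushed-down join or aggregate should be visible in it.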

Test pushdown for distinct & count

| Say | Do | Show |
|---|---|---|
| Create Spark DataFrames to test pushdown for distinct and count | Create a new cell in your EMR notebook and execute the code below | |
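
A minimal sketch of a distinct-and-count cell follows. The table `tickit.sales`, the column `buyerid`, the helper names, and the contents of `base_options` (JDBC URL, S3 staging prefix, IAM role) are all hypothetical; substitute the ones that match your environment.

```python
# Data source name of the spark-redshift community connector.
REDSHIFT_FORMAT = "io.github.spark_redshift_community.spark.redshift"


def table_options(base_options, table):
    """Return a copy of the shared options with the target table added."""
    merged = dict(base_options)  # copy so the caller's dictionary is not modified
    merged["dbtable"] = table
    return merged


def count_distinct_values(spark, base_options, table="tickit.sales", column="buyerid"):
    """Count the distinct values of one column; distinct and count are pushdown candidates."""
    df = (
        spark.read.format(REDSHIFT_FORMAT)
        .options(**table_options(base_options, table))
        .load()
    )
    return df.select(column).distinct().count()
```

Calling `count_distinct_values(spark, base_options)` in a notebook cell triggers the read; the SQL that Redshift actually received can then be inspected in the query history.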